This is a Swift community-driven repository for interacting with the OpenAI public API.

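SwiftOpenAI is a community-maintained, powerful, and easy-to-use Swift SDK, designed to integrate seamlessly with OpenAI's API. Its main goal is to simplify access to and interaction with OpenAI's advanced AI models (such as GPT-4, GPT-3, and future models) from within your Swift applications.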

To help developers, I have created this demo app. You only need to add your OpenAI API key to SwiftOpenAI.plist. The demo app gives you access to the core features of the SDK.

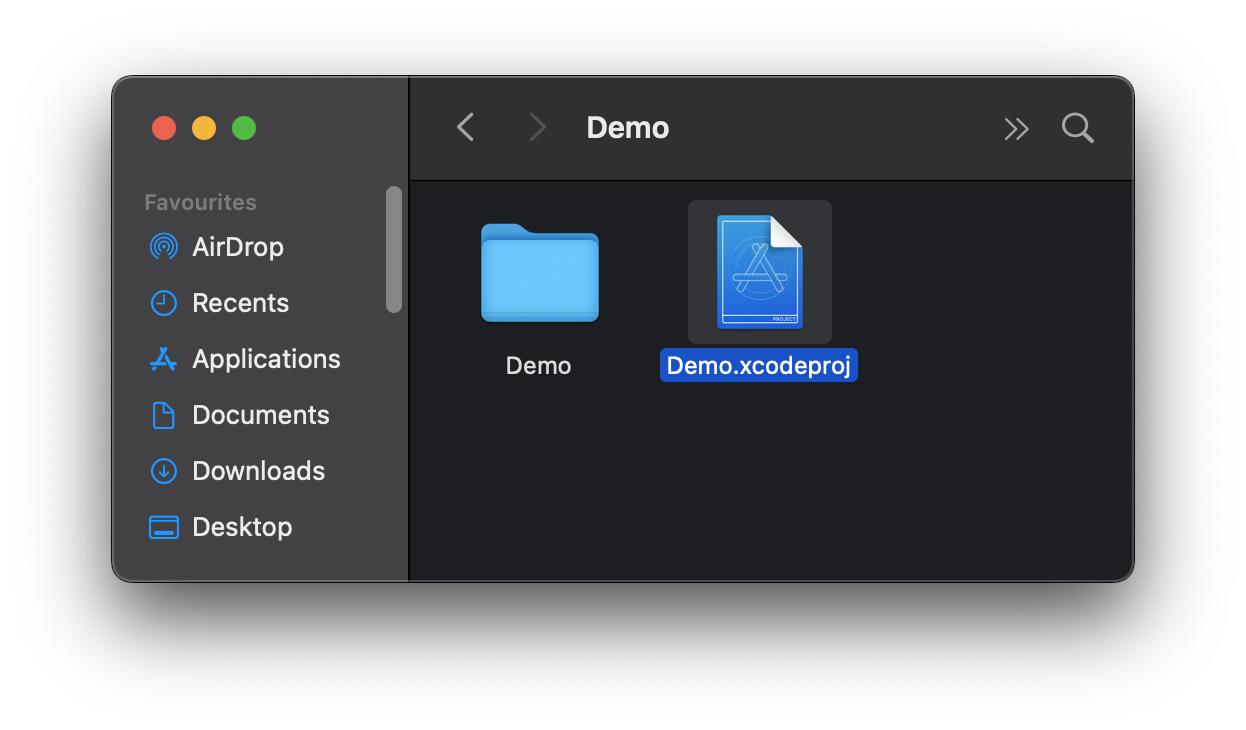

To try the demo app, you only need to open Demo.xcodeproj.

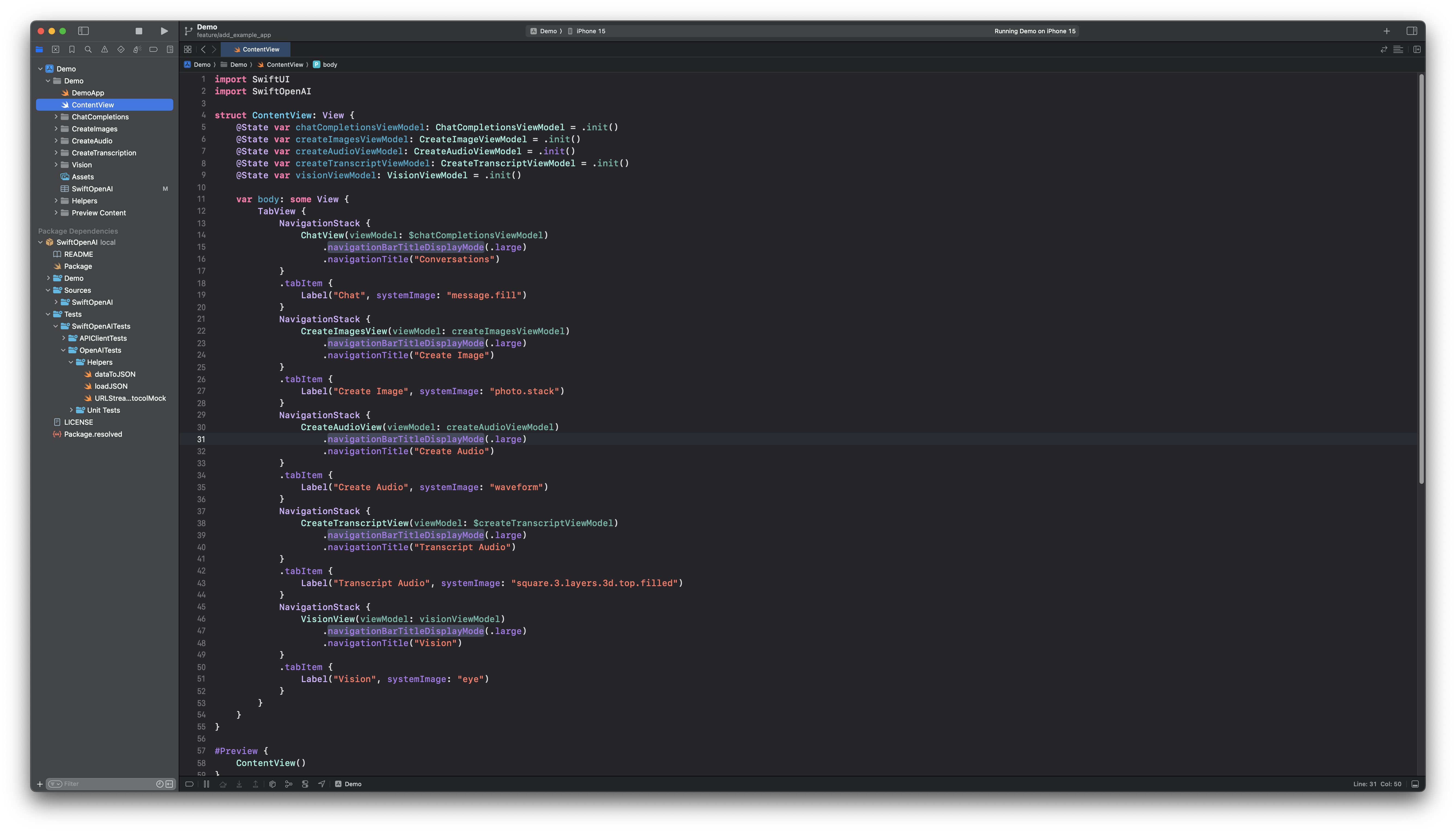

After that, you will see the project. The next step is to build and run it on a simulator (or a real device).

Open Xcode, go to the "Swift Package Manager" section, and paste this repository's GitHub URL (copy -> https://github.com/SwiftBeta/SwiftOpenAI.git) to install the package in your project.
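If your project is itself a Swift package rather than an Xcode app target, the same dependency can be declared in a manifest. The following is only a sketch: the package name `MyApp`, the platform versions, the `main` branch, and the product name `SwiftOpenAI` are assumptions, not details confirmed by this README.

```swift
// swift-tools-version:5.7
import PackageDescription

let package = Package(
    name: "MyApp", // Hypothetical package name.
    platforms: [.iOS(.v15), .macOS(.v12)], // Assumed deployment targets; adjust to your project.
    dependencies: [
        // Assumption: tracks the "main" branch; pin a release tag if you prefer reproducible builds.
        .package(url: "https://github.com/SwiftBeta/SwiftOpenAI.git", branch: "main")
    ],
    targets: [
        .target(
            name: "MyApp",
            dependencies: [
                // Assumption: the library product is named "SwiftOpenAI".
                .product(name: "SwiftOpenAI", package: "SwiftOpenAI")
            ]
        )
    ]
)
```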

Using SwiftOpenAI is simple and straightforward. Follow these steps to start interacting with OpenAI's API in your Swift project:

    import SwiftOpenAI

    var openAI = SwiftOpenAI(apiKey: "YOUR-API-KEY")

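To use SwiftOpenAI securely without hardcoding your API key, follow these steps:

- Create a .plist file: add a new .plist file to your project, for example Config.plist.

- Add your API key: in Config.plist, add a new row with the key OpenAI_API_Key and paste your API key into the value field.

- Load the API key: use the following code to load your API key from the .plist file: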

    import Foundation
    import SwiftOpenAI

    // Define a struct to handle configuration settings.
    struct Config {
        // Static variable to access the OpenAI API key.
        static var openAIKey: String {
            get {
                // Attempt to find the path of 'Config.plist' in the main bundle.
                guard let filePath = Bundle.main.path(forResource: "Config", ofType: "plist") else {
                    // If the file is not found, crash with an error message.
                    fatalError("Couldn't find file 'Config.plist'.")
                }

                // Load the contents of the plist file into an NSDictionary.
                let plist = NSDictionary(contentsOfFile: filePath)

                // Attempt to retrieve the value for the 'OpenAI_API_Key' from the plist.
                guard let value = plist?.object(forKey: "OpenAI_API_Key") as? String else {
                    // If the key is not found in the plist, crash with an error message.
                    fatalError("Couldn't find key 'OpenAI_API_Key' in 'Config.plist'.")
                }

                // Return the API key.
                return value
            }
        }
    }

    // Initialize an instance of SwiftOpenAI with the API key retrieved from Config.
    var openAI = SwiftOpenAI(apiKey: Config.openAIKey)
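The loader above crashes with `fatalError` when the file or key is missing. If you would rather surface the problem as an error, one possible variant is sketched below. It uses only Foundation; `ConfigError` and `apiKey(fromPlistData:key:)` are hypothetical names introduced for this illustration and are not part of SwiftOpenAI.

```swift
import Foundation

/// Errors that can occur while reading the API key (hypothetical type for this sketch).
enum ConfigError: Error, Equatable {
    case invalidPlist
    case missingKey(String)
}

/// Extracts a non-empty string value for `key` from raw property-list data.
func apiKey(fromPlistData data: Data, key: String = "OpenAI_API_Key") throws -> String {
    // Decode the plist and require a dictionary at the top level.
    guard let plist = try? PropertyListSerialization.propertyList(from: data, options: [], format: nil),
          let dictionary = plist as? [String: Any] else {
        throw ConfigError.invalidPlist
    }
    // Treat a missing or empty value as an error instead of crashing.
    guard let value = dictionary[key] as? String, !value.isEmpty else {
        throw ConfigError.missingKey(key)
    }
    return value
}
```

In an app you could read the bytes with `Data(contentsOf:)` from the URL returned by `Bundle.main.url(forResource: "Config", withExtension: "plist")` and pass them to this function, handling the thrown error wherever the client is created.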

Given a prompt and/or an input image, the model generates a new image using DALL·E 3.

    do {
        // Attempt to create an image using OpenAI's DALL-E 3 model.
        let image = try await openAI.createImages(
            model: .dalle(.dalle3), // Specify the DALL-E 3 model.
            prompt: prompt, // Use the provided prompt to guide image generation.
            numberOfImages: 1, // Request a single image.
            size: .s1024 // Specify the size of the generated image.
        )

        // Print the resulting image.
        print(image)
    } catch {
        // Handle any errors that occur during image creation.
        print("Error: \(error)")
    }

Given an original image and a prompt, creates an edited or extended image.

    do {
        // Attempt to edit an image using OpenAI's DALL-E 2 model.
        let modelType = OpenAIImageModelType.dalle(.dalle2) // Specify the DALL-E 2 model.
        let imageData = yourImageData // Binary data of the image to be edited.
        let maskData = yourMaskData // Binary data of the mask to be applied.
        let promptText = "A futuristic cityscape." // Describe the desired modifications.
        let numberOfImages = 3 // Request multiple edited image variations.
        let imageSize: ImageSize = .s1024 // Specify the size of the generated images.

        // Request the edited images and process them.
        if let editedImages = try await openAI.editImage(
            model: modelType,
            imageData: imageData,
            maskData: maskData,
            prompt: promptText,
            numberOfImages: numberOfImages,
            size: imageSize
        ) {
            print("Received edited images: \(editedImages)")
        }
    } catch {
        // Handle any errors that occur during the image editing process.
        print("Error editing image: \(error)")
    }

Creates variations of a provided image through the OpenAI API, using the DALL·E 2 model.

    do {
        // Attempt to create image variations using OpenAI's DALL-E 2 model.
        let modelType = OpenAIImageModelType.dalle(.dalle2) // Specify the DALL-E 2 model.
        let imageData = yourImageData // Binary data of the original image to be varied.
        let numberOfImages = 5 // Request multiple image variations.
        let imageSize: ImageSize = .s1024 // Specify the size of the generated images.

        // Request the image variations and process them.
        if let imageVariations = try await openAI.variationImage(
            model: modelType,
            imageData: imageData,
            numberOfImages: numberOfImages,
            size: imageSize
        ) {
            print("Received image variations: \(imageVariations)")
        }
    } catch {
        // Handle any errors that occur during the image variation creation process.
        print("Error generating image variations: \(error)")
    }

Generates audio from the input text. You can specify the voice and the responseFormat.

    do {
        // Define the input text that will be converted to speech.
        let input = "Hello, I'm SwiftBeta, a developer who in his free time tries to teach through his blog swiftbeta.com and his YouTube channel. Now I'm adding the OpenAI API to transform this text into audio"

        // Generate audio from the input text using OpenAI's text-to-speech API.
        let data = try await openAI.createSpeech(
            model: .tts(.tts1), // Specify the text-to-speech model, here tts1.
            input: input, // Provide the input text.
            voice: .alloy, // Choose the voice type, here 'alloy'.
            responseFormat: .mp3, // Set the audio response format as MP3.
            speed: 1.0 // Set the speed of the speech. 1.0 is normal speed.
        )

        // Retrieve the file path in the document directory to save the audio file.
        if let filePath = FileManager.default.urls(for: .documentDirectory, in: .userDomainMask).first?.appendingPathComponent("speech.mp3") {
            do {
                // Save the generated audio data to the specified file path.
                try data.write(to: filePath)

                // Confirm saving of the audio file with a print statement.
                print("Audio file saved: \(filePath)")
            } catch {
                // Handle any errors encountered while writing the audio file.
                print("Error saving Audio file: \(error)")
            }
        }
    } catch {
        // Handle any errors encountered during the audio creation process.
        print(error.localizedDescription)
    }

Transcribes audio into the input language.

    // Placeholder for the data from your video or audio file.
    let fileData: Data = yourAudioData // Data from your video, audio, etc.

    // Specify the transcription model to be used, here 'whisper'.
    let model: OpenAITranscriptionModelType = .whisper

    do {
        // Attempt to transcribe the audio using OpenAI's transcription service.
        for try await newMessage in try await openAI.createTranscription(
            model: model, // The specified transcription model.
            file: fileData, // The audio data to be transcribed.
            language: "en", // Set the language of the transcription to English.
            prompt: "", // An optional prompt for guiding the transcription, if needed.
            responseFormat: .mp3, // The format of the response. Note: Typically, transcription responses are in text format.
            temperature: 1.0 // Sampling temperature. Higher values make the output more random; lower values make it more deterministic.
        ) {
            // Print each new transcribed message as it's received.
            print("Received Transcription \(newMessage)")

            // Update the UI on the main thread after receiving transcription.
            await MainActor.run {
                isLoading = false // Update loading state.
                transcription = newMessage.text // Update the transcription text.
            }
        }
    } catch {
        // Handle any errors that occur during the transcription process.
        print(error.localizedDescription)
    }

Translates audio into English.

    // Placeholder for the data from your video or audio file.
    let fileData: Data = yourAudioData // Data from your video, audio, etc.

    // Specify the translation model to be used, here 'whisper'.
    let model: OpenAITranscriptionModelType = .whisper

    do {
        // Attempt to translate the audio using OpenAI's translation service.
        for try await newMessage in try await openAI.createTranslation(
            model: model, // The specified translation model.
            file: fileData, // The audio data to be translated.
            prompt: "", // An optional prompt for guiding the translation, if needed.
            responseFormat: .mp3, // The format of the response. Note: Typically, translation responses are in text format.
            temperature: 1.0 // Sampling temperature. Higher values make the output more random; lower values make it more deterministic.
        ) {
            // Print each new translated message as it's received.
            print("Received Translation \(newMessage)")

            // Update the UI on the main thread after receiving translation.
            await MainActor.run {
                isLoading = false // Update loading state.
                translation = newMessage.text // Update the translation text.
            }
        }
    } catch {
        // Handle any errors that occur during the translation process.
        print(error.localizedDescription)
    }

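Given a chat conversation, the model returns a chat completion response, delivered as a stream while it is being generated.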

    // Define an array of MessageChatGPT objects representing the conversation.
    let messages: [MessageChatGPT] = [
        // A system message to set the context or role of the assistant.
        MessageChatGPT(text: "You are a helpful assistant.", role: .system),
        // A user message asking a question.
        MessageChatGPT(text: "Who won the world series in 2020?", role: .user)
    ]

    // Define optional parameters for the chat completion request.
    let optionalParameters = ChatCompletionsOptionalParameters(
        temperature: 0.7, // Sampling temperature; higher values make the response more random.
        stream: true, // Enable streaming to get responses as they are generated.
        maxTokens: 50 // Limit the maximum number of tokens in the response (a token is a word fragment, not a whole word).
    )

    do {
        // Create a chat completion stream using the OpenAI API.
        let stream = try await openAI.createChatCompletionsStream(
            model: .gpt4o(.base), // Specify the model, here the GPT-4o base model.
            messages: messages, // Provide the conversation messages.
            optionalParameters: optionalParameters // Include the optional parameters.
        )

        // Iterate over each response received in the stream.
        for try await response in stream {
            // Print each response as it's received.
            print(response)
        }
    } catch {
        // Handle any errors encountered during the chat completion process.
        print("Error: \(error)")
    }
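A streamed reply arrives in fragments, so an app usually needs to join them into one message before storing or displaying the final text. The helper below is a minimal sketch of that step. It assumes you have already mapped each streamed response to its text fragment (the shape of SwiftOpenAI's response type is not shown in this README), and `collectText(from:)` is a hypothetical name.

```swift
/// Concatenates text fragments from an asynchronous sequence into one string.
/// Hypothetical helper; it works with any AsyncSequence whose elements are String.
func collectText<S: AsyncSequence>(from fragments: S) async throws -> String where S.Element == String {
    var fullText = ""
    // Append each fragment in arrival order; an error thrown by the sequence propagates to the caller.
    for try await fragment in fragments {
        fullText += fragment
    }
    return fullText
}
```

To show partial text while the reply is still arriving, you could assign the running `fullText` to your view state inside the loop instead of waiting for the final return value.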

Given a chat conversation, the model returns a chat completion response as a single, non-streamed reply.

    // Define an array of MessageChatGPT objects representing the conversation.
    let messages: [MessageChatGPT] = [
        // A system message to set the context or role of the assistant.
        MessageChatGPT(text: "You are a helpful assistant.", role: .system),
        // A user message asking a question.
        MessageChatGPT(text: "Who won the world series in 2020?", role: .user)
    ]

    // Define optional parameters for the chat completion request.
    let optionalParameters = ChatCompletionsOptionalParameters(
        temperature: 0.7, // Sampling temperature; higher values make the response more random.
        maxTokens: 50 // Limit the maximum number of tokens in the response (a token is a word fragment, not a whole word).
    )

    do {
        // Request chat completions from the OpenAI API.
        let chatCompletions = try await openAI.createChatCompletions(
            model: .gpt4(.base), // Specify the model, here GPT-4 base model.
            messages: messages, // Provide the conversation messages.
            optionalParameters: optionalParameters // Include the optional parameters.
        )

        // Print the received chat completions.
        print(chatCompletions)
    } catch {
        // Handle any errors encountered during the chat completion process.
        print("Error: \(error)")
    }

Given a chat conversation with an image input, the model returns a chat completion response.

    // Define a text message to be used in conjunction with an image.
    let message = "What appears in the photo?"

    // URL of the image to be analyzed.
    let imageVisionURL = "https://upload.wikimedia.org/wikipedia/commons/thumb/5/57/M31bobo.jpg/640px-M31bobo.jpg"

    do {
        // Create a message object for chat completion with image input.
        let myMessage = MessageChatImageInput(
            text: message, // The text part of the message.
            imageURL: imageVisionURL, // URL of the image to be included in the chat.
            role: .user // The role assigned to the message, here 'user'.
        )

        // Define optional parameters for the chat completion request.
        let optionalParameters: ChatCompletionsOptionalParameters = .init(
            temperature: 0.5, // Sampling temperature; higher values make the response more random.
            stop: ["stopstring"], // Define a stop string for the model to end responses.
            stream: false, // Disable streaming to get complete responses at once.
            maxTokens: 1200 // Limit the maximum number of tokens in the response (a token is a word fragment, not a whole word).
        )

        // Request chat completions from the OpenAI API with image input.
        let result = try await openAI.createChatCompletionsWithImageInput(
            model: .gpt4(.gpt_4_vision_preview), // Specify the model, here GPT-4 vision preview.
            messages: [myMessage], // Provide the message with image input.
            optionalParameters: optionalParameters // Include the optional parameters.
        )

        // Print the result of the chat completion (String(describing:) avoids the optional-interpolation warning).
        print("Result \(String(describing: result?.choices.first?.message))")

        // Update the message with the content of the first response, if available.
        self.message = result?.choices.first?.message.content ?? "No value"

        // Update the loading state to false as the process completes.
        self.isLoading = false
    } catch {
        // Handle any errors encountered during the chat completion process.
        print("Error: \(error)")
    }
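The example above passes a remote image URL. OpenAI's chat API also accepts images embedded as base64 `data:` URLs, which helps when the image exists only on the device. The sketch below builds such a string with Foundation. `dataURL(for:mimeType:)` is a hypothetical helper, and whether SwiftOpenAI's `imageURL` parameter forwards the string to the API unchanged is an assumption you should verify.

```swift
import Foundation

/// Builds a `data:` URL string that embeds the given bytes as base64.
/// Hypothetical helper for this sketch; the default MIME type is an assumption.
func dataURL(for data: Data, mimeType: String = "image/jpeg") -> String {
    "data:\(mimeType);base64,\(data.base64EncodedString())"
}
```

For example, `dataURL(for: jpegData)` could be passed wherever the remote URL string is used above, where `jpegData` holds the JPEG bytes of your image.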

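Lists and describes the various models available in the API. You can refer to the models documentation to understand which models are available and how they differ.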

    do {
        // Request a list of models available in the OpenAI API.
        let modelList = try await openAI.listModels()

        // Print the list of models received from the API.
        print(modelList)
    } catch {
        // Handle any errors that occur during the request.
        print("Error: \(error)")
    }

Gets a vector representation of a given input that can be easily consumed by machine learning models and algorithms.

    // Define the input text for which embeddings will be generated.
    let inputText = "Embeddings are a numerical representation of text."

    do {
        // Attempt to generate embeddings using OpenAI's API.
        let embeddings = try await openAI.embeddings(
            model: .embedding(.text_embedding_ada_002), // Specify the embedding model, here 'text_embedding_ada_002'.
            input: inputText // Provide the input text for which embeddings are needed.
        )

        // Print the embeddings generated from the input text.
        print(embeddings)
    } catch {
        // Handle any errors that occur during the embeddings generation process.
        print("Error: \(error)")
    }
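A common way to use embedding vectors is to compare two of them with cosine similarity, where values closer to 1 indicate more similar texts. The function below is a minimal, self-contained sketch of that comparison. `cosineSimilarity` is a hypothetical helper, and it assumes you have already extracted the embeddings as `[Double]` arrays from the response.

```swift
/// Cosine similarity of two equal-length vectors.
/// Returns nil when the lengths differ, the vectors are empty, or either vector has zero magnitude.
func cosineSimilarity(_ a: [Double], _ b: [Double]) -> Double? {
    guard a.count == b.count, !a.isEmpty else { return nil }
    var dot = 0.0, normA = 0.0, normB = 0.0
    // Accumulate the dot product and both squared magnitudes in a single pass.
    for (x, y) in zip(a, b) {
        dot += x * y
        normA += x * x
        normB += y * y
    }
    guard normA > 0, normB > 0 else { return nil }
    return dot / (normA.squareRoot() * normB.squareRoot())
}
```

Returning an optional keeps the caller from mistaking a length mismatch or an all-zero vector for a genuine similarity score of zero.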

Given an input text, outputs whether the model classifies it as violating OpenAI's content policy.

    // Define the text input to be checked for potentially harmful or explicit content.
    let inputText = "Some potentially harmful or explicit content."

    do {
        // Request moderation on the input text using OpenAI's API.
        let moderationResults = try await openAI.moderations(
            input: inputText // Provide the text input for moderation.
        )

        // Print the results of the moderation.
        print(moderationResults)
    } catch {
        // Handle any errors that occur during the moderation process.
        print("Error: \(error)")
    }

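Given a prompt, the model returns one or more predicted completions, and can also return the probabilities of alternative tokens at each position.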

    // Define a prompt for generating text completions.
    let prompt = "Once upon a time, in a land far, far away,"

    // Set optional parameters for the completion request.
    let optionalParameters = CompletionsOptionalParameters(
        prompt: prompt, // The initial text prompt to guide the model.
        maxTokens: 50, // Limit the maximum number of tokens in the completion (a token is a word fragment, not a whole word).
        temperature: 0.7, // Sampling temperature; higher values make the response more random.
        n: 1 // Number of completions to generate.
    )

    do {
        // Request text completions from OpenAI using the GPT-3.5 model.
        let completions = try await openAI.completions(
            model: .gpt3_5(.turbo), // Specify the model, here GPT-3.5 turbo.
            optionalParameters: optionalParameters // Include the optional parameters.
        )

        // Print the generated completions.
        print(completions)
    } catch {
        // Handle any errors encountered during the completion generation process.
        print("Error: \(error)")
    }

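Here is a complete example using SwiftUI.

The createChatCompletionsStream method lets you interact with the OpenAI API by generating chat-based completions that arrive as a stream. Provide the necessary parameters to customize the completions, such as the model, the messages, and other optional settings.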

    import SwiftUI
    import SwiftOpenAI

    struct ContentView: View {
        var openAI = SwiftOpenAI(apiKey: "YOUR-API-KEY")

        var body: some View {
            VStack {
                Image(systemName: "globe")
                    .imageScale(.large)
                    .foregroundColor(.accentColor)
                Text("Hello, world!")
            }
            .padding()
            .onAppear {
                Task {
                    do {
                        for try await newMessage in try await openAI.createChatCompletionsStream(model: .gpt3_5(.turbo),
                                                                                                 messages: [.init(text: "Generate the Hello World in Swift for me", role: .user)],
                                                                                                 optionalParameters: .init(stream: true)) {
                            print("New Message Received: \(newMessage) ")
                        }
                    } catch {
                        print(error)
                    }
                }
            }
        }
    }

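The createChatCompletions method lets you interact with the OpenAI API by generating chat-based completions. Provide the necessary parameters to customize the completions, such as the model, the messages, and other optional settings.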

    import SwiftUI
    import SwiftOpenAI

    struct ContentView: View {
        var openAI = SwiftOpenAI(apiKey: "YOUR-API-KEY")

        var body: some View {
            VStack {
                Image(systemName: "globe")
                    .imageScale(.large)
                    .foregroundColor(.accentColor)
                Text("Hello, world!")
            }
            .padding()
            .onAppear {
                Task {
                    do {
                        let result = try await openAI.createChatCompletions(model: .gpt3_5(.turbo),
                                                                            messages: [.init(text: "Generate the Hello World in Swift for me", role: .user)])
                        print(result)
                    } catch {
                        print(error)
                    }
                }
            }
        }
    }

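MIT License

Copyright 2023 SwiftBeta

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.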